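Environment: PyCharm 2022.1

The main steps of modeling:

Data preparation, data preprocessing, feature engineering, model building and evaluation, and model optimization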

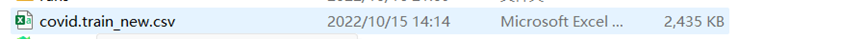

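Data preparation:

The dataset provided by the instructor

Data preprocessing

1. Split the dataset

The instructor provided only a single dataset, so we need to split it into a training set and a validation set ourselves.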
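To illustrate what a seeded split with a validation ratio does, here is a minimal pure-Python sketch. It uses `random.Random` as a stand-in for PyTorch's `random_split`, and `split_indices` is a hypothetical helper that does not appear in the original code.

```python
import random

def split_indices(n_samples, valid_ratio, seed):
    """Hypothetical helper: shuffle indices with a fixed seed, then cut."""
    valid_size = int(valid_ratio * n_samples)
    train_size = n_samples - valid_size
    indices = list(range(n_samples))
    # A dedicated Random instance keeps the shuffle independent of global state.
    random.Random(seed).shuffle(indices)
    return indices[:train_size], indices[train_size:]

train_idx, valid_idx = split_indices(n_samples=10, valid_ratio=0.2, seed=5201314)
print(len(train_idx), len(valid_idx))  # 8 2
```

Calling the helper again with the same seed returns the same two index lists, which is the point of fixing the seed: the validation set stays the same across runs, so losses are comparable.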

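    import numpy as np
    import torch
    from torch.utils.data import random_split

    # Arguments: dataset, validation ratio, random seed
    def train_valid_split(data_set, valid_ratio, seed):
        '''Split provided training data into training set and validation set'''
        valid_set_size = int(valid_ratio * len(data_set))
        train_set_size = len(data_set) - valid_set_size
        # Split into training and validation subsets; the seeded generator makes the split reproducible
        train_set, valid_set = random_split(data_set, [train_set_size, valid_set_size], generator=torch.Generator().manual_seed(seed))
        # Return NumPy arrays
        return np.array(train_set), np.array(valid_set)

2. Extract the target values and feature values first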

    def select_feat(train_data, valid_data, test_data, select_all=True):
        '''Selects useful features to perform regression'''
        # The last column of the training and validation sets is the target
        y_train, y_valid = train_data[:, -1], valid_data[:, -1]
        # All columns except the last are features; the test set has no target column
        raw_x_train, raw_x_valid, raw_x_test = train_data[:, :-1], valid_data[:, :-1], test_data

        if select_all:
            # Use every feature column: indices 0 to n_columns - 1
            feat_idx = list(range(raw_x_train.shape[1]))
        else:
            # Otherwise use only columns 0 to 4
            feat_idx = [0, 1, 2, 3, 4]  # TODO: Select suitable feature columns.

        # Return the features of the training, validation, and test sets,
        # plus the targets of the training and validation sets
        return raw_x_train[:, feat_idx], raw_x_valid[:, feat_idx], raw_x_test[:, feat_idx], y_train, y_valid

3. Remove outliers

    from torch.utils.data import Dataset

    # Note: this class only wraps the features and targets as float tensors;
    # it does not itself filter out any samples.
    class COVID19Dataset(Dataset):
        '''
        x: Features.
        y: Targets, if none, do prediction.
        '''
        def __init__(self, x, y=None):
            if y is None:
                self.y = y
            else:
                self.y = torch.FloatTensor(y)
            self.x = torch.FloatTensor(x)

        def __getitem__(self, idx):
            if self.y is None:
                return self.x[idx]
            else:
                return self.x[idx], self.y[idx]

        def __len__(self):
            return len(self.x)

4. Feature selection

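Not every feature is useful for predicting the target. To reduce the amount of computation, we keep only the 32 features that are most strongly correlated with the target.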
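As a rough illustration of ranking features by their relationship to the target, the sketch below scores each column by the absolute Pearson correlation and keeps the top `k`. This is a pure-Python stand-in, not scikit-learn itself. For a single feature the regression F-statistic is, as far as I understand it, F = r²(n − 2)/(1 − r²), which grows with r², so ranking by |r| should give the same order as ranking by F. The helper names and the toy data are made up for the example.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(xs, ys))
    var_x = sum((a - mean_x) ** 2 for a in xs)
    var_y = sum((b - mean_y) ** 2 for b in ys)
    return cov / math.sqrt(var_x * var_y)

def top_k_features(columns, target, k):
    """Hypothetical helper: indices of the k columns with the largest |r|."""
    scores = [abs(pearson_r(col, target)) for col in columns]
    ranked = sorted(range(len(columns)), key=lambda i: scores[i], reverse=True)
    return sorted(ranked[:k])

# Toy data (made up for illustration): three feature columns, one target.
target = [1.0, 2.0, 3.0, 4.0, 5.0]
columns = [
    [2.0, 4.1, 5.9, 8.2, 9.9],  # nearly linear in the target
    [3.0, 1.0, 4.0, 1.0, 5.0],  # only weakly related
    [5.0, 4.0, 3.1, 2.0, 0.9],  # strongly, but negatively, related
]
print(top_k_features(columns, target, k=2))  # [0, 2]
```

Note that the negatively correlated column is kept: what matters for selection is the strength of the relationship, not its sign.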

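    from sklearn.feature_selection import SelectKBest, f_regression

    # Select the 32 best features
    # Note: returns x alone when y is None, but a (z, features) tuple otherwise
    def selected_best(x, y=None):
        if y is None:
            return x
        # Score each feature by its F-statistic against the target (univariate regression test)
        select = SelectKBest(score_func=f_regression, k=32)
        z = select.fit_transform(x, y)
        # Names of the selected features
        features = select.get_feature_names_out()
        print('Printing feature names')
        # print(select.get_feature_names_out())
        print(z.shape)
        return z, features

Modeling and evaluation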

1. A five-layer neural network
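As a quick sanity check on how small this network is, the sketch below counts the trainable parameters of a stack of fully connected layers with the widths used in this section (32, 8, 4, 2, 1). The input width of 32 is an assumption for illustration (matching the 32 selected features), and `count_linear_params` is a hypothetical helper, not part of the original code.

```python
def count_linear_params(layer_sizes):
    """Hypothetical helper: parameters of a stack of fully connected layers."""
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out + n_out  # weight matrix plus bias vector
    return total

# input_dim = 32 is assumed; the remaining widths follow the model in this section.
layer_sizes = [32, 32, 8, 4, 2, 1]
print(count_linear_params(layer_sizes))  # 1369
```

ReLU layers add no parameters, so only the five linear layers contribute to the total.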

    import torch.nn as nn

    class My_Model(nn.Module):
        def __init__(self, input_dim):
            super(My_Model, self).__init__()
            # TODO: modify model's structure, be aware of dimensions.
            # nn.Sequential chains modules so that they run in order
            self.layers = nn.Sequential(
                # Five linear layers with four ReLU activations in between
                # (a five-layer perceptron)
                nn.Linear(input_dim, 32),
                nn.ReLU(),
                nn.Linear(32, 8),
                nn.ReLU(),
                nn.Linear(8, 4),
                nn.ReLU(),
                nn.Linear(4, 2),
                nn.ReLU(),
                nn.Linear(2, 1)
            )

        # forward runs the forward pass on an input tensor of shape (B, input_dim)
        def forward(self, x):
            x = self.layers(x)
            x = x.squeeze(1)  # (B, 1) -> (B): drop the size-1 dimension
            return x

2. Training parameters

    # Select the training device: use the GPU if available, otherwise the CPU
    device = 'cuda' if torch.cuda.is_available() else 'cpu'

    # Training settings
    config = {
        'seed': 5201314,      # Your seed number, you can pick your lucky number. :)
        'select_all': True,   # Whether to use all features.
        'valid_ratio': 0.2,   # validation_size = dataset_size * valid_ratio
        'n_epochs': 3000,     # Number of epochs.
        'batch_size': 64,
        'learning_rate': 1e-5,
        'early_stop': 400,    # If model has not improved for this many consecutive epochs, stop training.
        'save_path': './models/model.ckpt'  # Your model will be saved here.
    }

3. Training function
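The training function in this section stops once the validation loss has failed to improve for `early_stop` consecutive epochs. The sketch below isolates that counter logic in pure Python so it can be followed without a model. `run_with_early_stop` is a hypothetical helper and the loss values are made up.

```python
import math

def run_with_early_stop(valid_losses, patience):
    """Hypothetical helper: replay per-epoch validation losses with early stopping."""
    best_loss, stale_epochs = math.inf, 0
    for epoch, loss in enumerate(valid_losses, start=1):
        if loss < best_loss:
            best_loss, stale_epochs = loss, 0  # improvement: reset the counter
        else:
            stale_epochs += 1                  # no improvement this epoch
        if stale_epochs >= patience:
            return epoch, best_loss            # stop training here
    return len(valid_losses), best_loss

# Made-up losses: improvement stops after epoch 3.
losses = [0.9, 0.7, 0.6, 0.65, 0.61, 0.62, 0.5]
print(run_with_early_stop(losses, patience=3))  # (6, 0.6)
```

The last value (0.5) is never reached, which shows the trade-off: a small patience saves time but can stop before a later improvement, which is presumably why the configuration here uses a generous value of 400.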

    import math
    import os

    import torch
    import torch.nn as nn
    from torch.utils.tensorboard import SummaryWriter
    from tqdm import tqdm

    # Arguments: training loader, validation loader, model, config, device
    def trainer(train_loader, valid_loader, model, config, device):
        # Loss function
        criterion = nn.MSELoss(reduction='mean')  # Define your loss function, do not modify this.

        # Define your optimization algorithm.
        # TODO: Please check https://pytorch.org/docs/stable/optim.html to get more available algorithms.
        # TODO: L2 regularization (optimizer(weight decay...) or implement by your self).
        # SGD with momentum; the arguments are the model parameters, the learning rate,
        # and the momentum (no weight decay is set here)
        optimizer = torch.optim.SGD(model.parameters(), lr=config['learning_rate'], momentum=0.9)

        # Create a SummaryWriter
        writer = SummaryWriter()  # Writer of TensorBoard.

        # Create the models directory if it does not exist yet
        if not os.path.isdir('./models'):
            os.mkdir('./models')  # Create directory of saving models.

        # Initialize the training state
        n_epochs, best_loss, step, early_stop_count = config['n_epochs'], math.inf, 0, 0

        # Training loop
        for epoch in range(n_epochs):
            model.train()  # Set your model to train mode.
            # Per-batch losses for this epoch
            loss_record = []

            # tqdm is a package to visualize your training progress.
            train_pbar = tqdm(train_loader, position=0, leave=True)

            for x, y in train_pbar:
                optimizer.zero_grad()  # Set gradient to zero.
                x, y = x.to(device), y.to(device)  # Move your data to device.
                pred = model(x)  # Predictions
                loss = criterion(pred, y)  # Loss between predictions and targets
                loss.backward()  # Compute gradient(backpropagation).
                optimizer.step()  # Update parameters.
                step += 1
                # Record this batch's loss
                loss_record.append(loss.detach().item())

                # Display current epoch number and loss on tqdm progress bar.
                train_pbar.set_description(f'Epoch [{epoch + 1}/{n_epochs}]')
                train_pbar.set_postfix({'loss': loss.detach().item()})

# 计算损失值列表的平均损失值

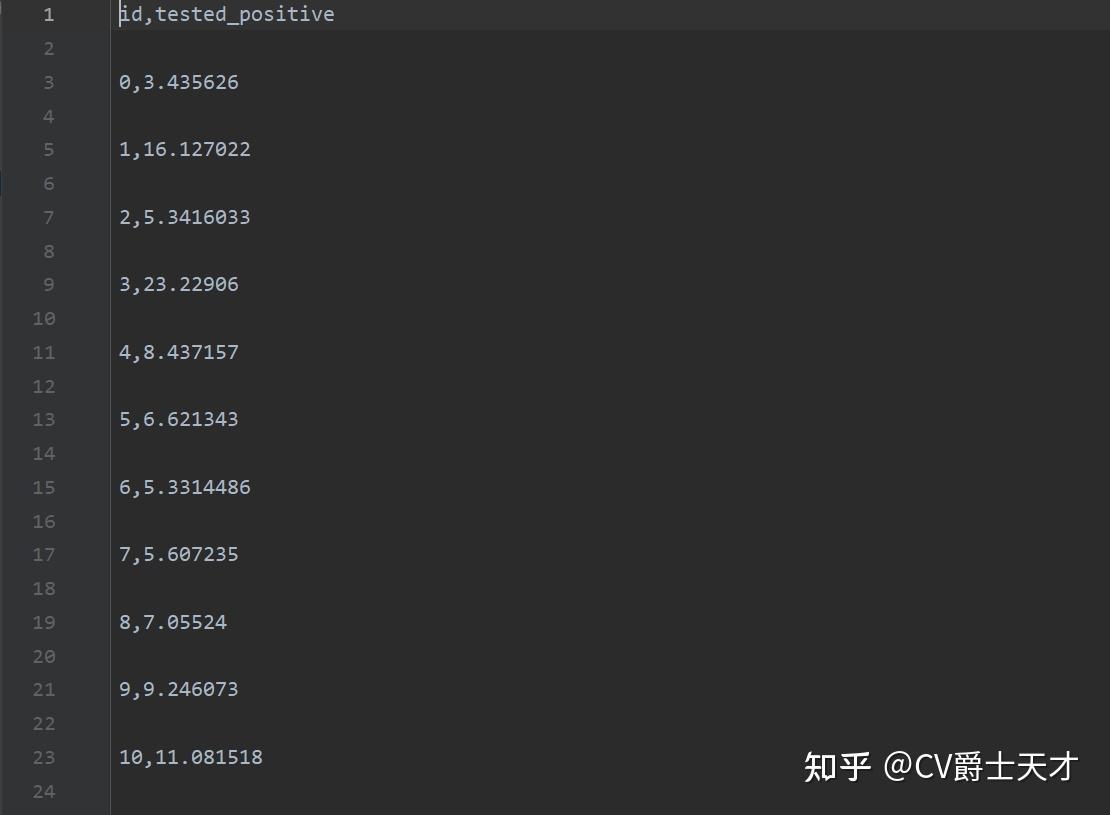

mean_train_loss = sum(loss_record) / len(loss_record)

# 写入Loss/train这个图表中

writer.add_scalar('Loss/train', mean_train_loss, step)

# 设置模型的评估方式

model.eval() # Set your model to evaluation mode.

# 生成损失函数的空列表

loss_record = []

# 验证集开始,过程跟训练集一样

for x, y in valid_loader:

x, y = x.to(device), y.to(device)

# 这一步我也不懂,好像很多模型验证的时候都有

with torch.no_grad():

pred = model(x)

loss = criterion(pred, y)

loss_record.append(loss.item())

mean_valid_loss = sum(loss_record) / len(loss_record)

print(f'Epoch [{epoch + 1}/{n_epochs}]: Train loss: {mean_train_loss:.4f}, Valid loss: {mean_valid_loss:.4f}')

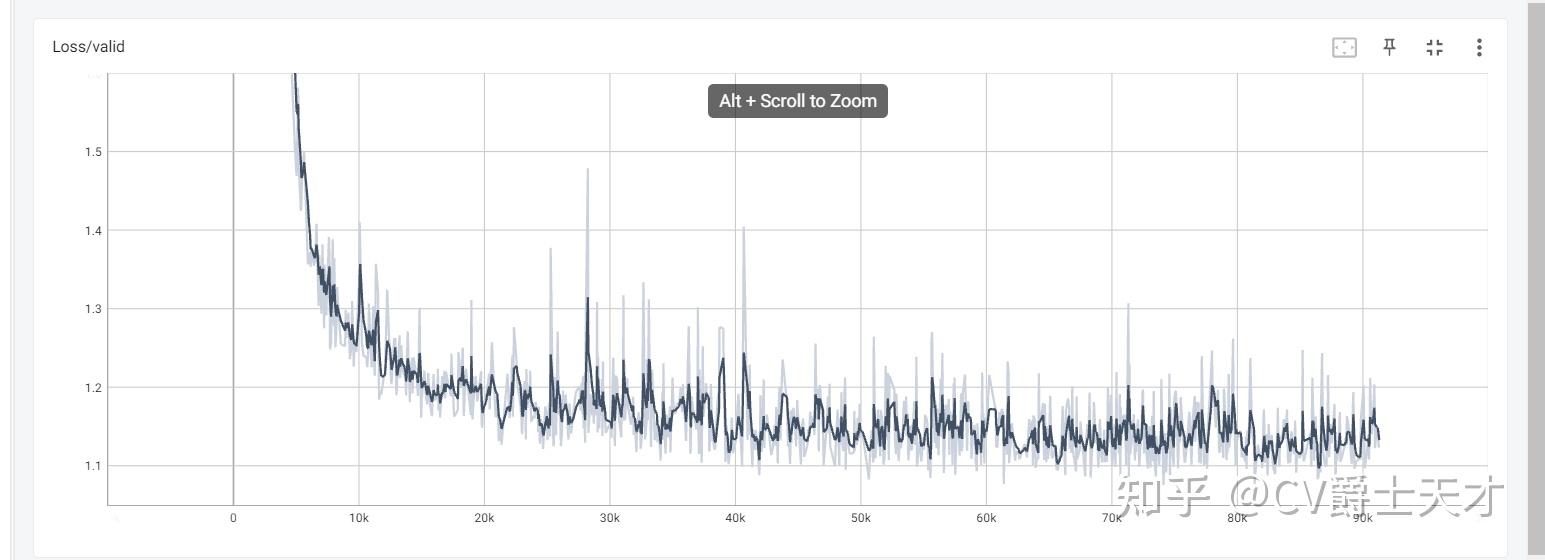

writer.add_scalar('Loss/valid', mean_valid_loss, step)

# 如果验证集的平均损失值小于最小的损失值,则更新最小损失值,并保存最好的模型,停止步骤设置为0,否则停止步骤+1

if mean_valid_loss < best_loss:

best_loss = mean_valid_loss

torch.save(model.state_dict(), config[&#39;save_path&#39;]) # Save your best model

print(&#39;Saving model with loss {:.3f}...&#39;.format(best_loss))

early_stop_count = 0

else:

early_stop_count += 1

# 如果训练400次损失值没有提升,则停止训练

if early_stop_count >= config[&#39;early_stop&#39;]:

print(&#39;\nModel is not improving, so we halt the training session.&#39;)

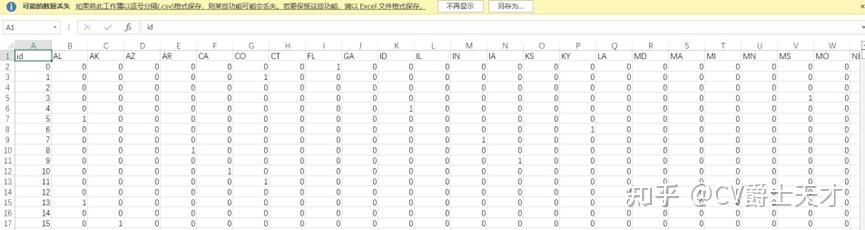

return测试

测试过程

    import csv

    # Prediction: the arguments are the test loader, the model, and the device
    def predict(test_loader, model, device):
        # model = My_Model(input_dim=117).to(device)
        model.eval()  # Set your model to evaluation mode.
        preds = []
        with torch.no_grad():
            for x in tqdm(test_loader):
                x = x.to(device)
                pred = model(x)
                preds.append(pred.detach().cpu())
        preds = torch.cat(preds, dim=0).numpy()
        return preds

    # Write the predictions to a file
    def save_pred(preds, file):
        ''' Save predictions to specified file '''
        # newline='' prevents blank rows between records on Windows
        with open(file, 'w', newline='') as fp:
            writer = csv.writer(fp)
            writer.writerow(['id', 'tested_positive'])
            for i, p in enumerate(preds):
                writer.writerow([i, p])

    # Testing (run after the functions above are defined; x_train and test_loader
    # are assumed to have been built in the earlier steps)
    model = My_Model(input_dim=x_train.shape[1]).to(device)
    model.load_state_dict(torch.load(config['save_path']))
    preds = predict(test_loader, model, device)
    save_pred(preds, 'pred.csv')

Test results

CV爵士天才: Infectious disease spread prediction model